8 Advanced parallelization - Deep Learning with JAX

By a mysterious writer

Description

Using easy-to-revise parallelism with xmap() · Compiling and automatically partitioning functions with pjit() · Using tensor sharding to achieve parallelization with XLA · Running code in multi-host configurations

Intro to JAX for Machine Learning, by Khang Pham

Efficiently Scale LLM Training Across a Large GPU Cluster with

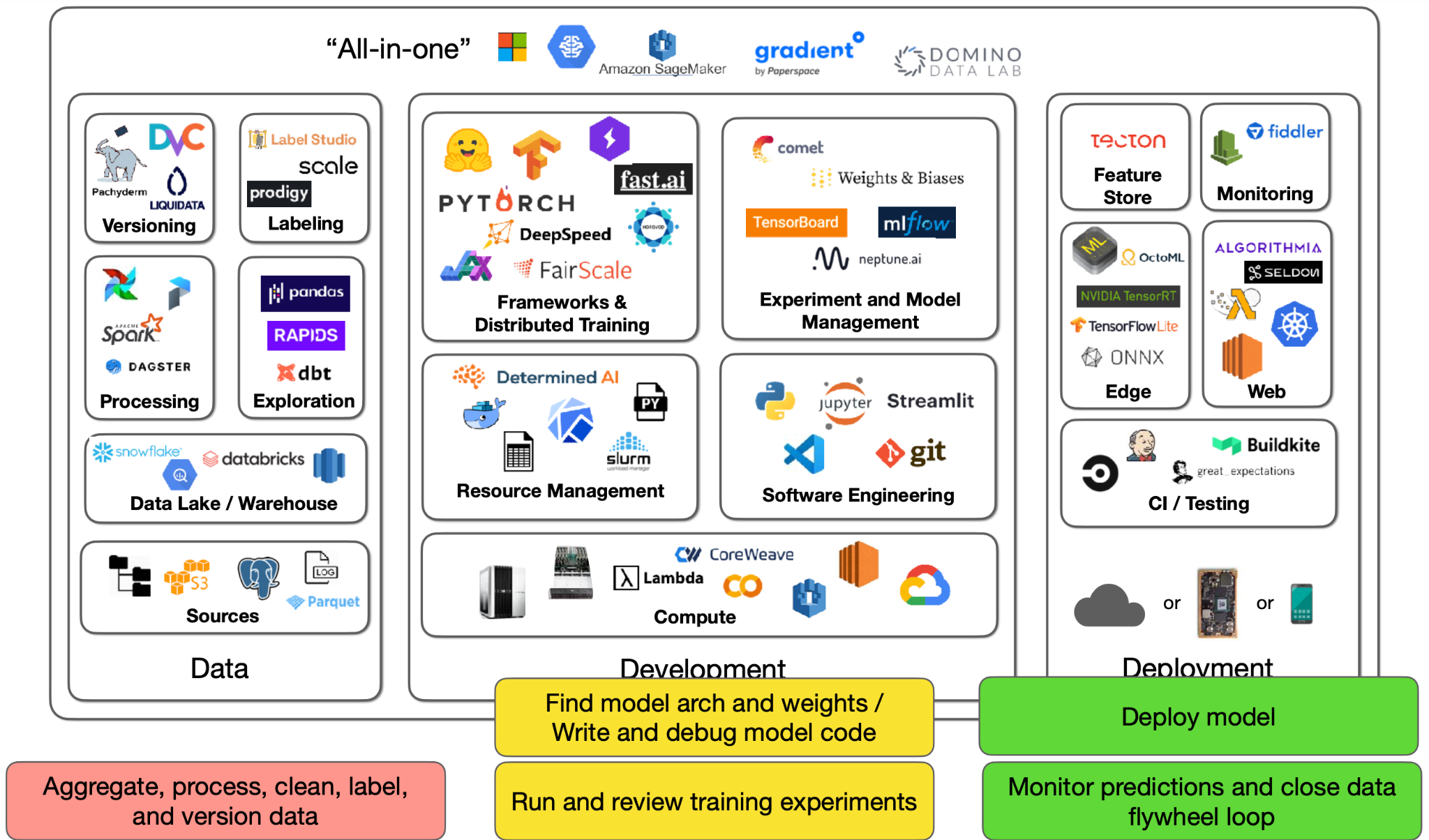

Lecture 2: Development Infrastructure & Tooling - The Full Stack

Tutorial 2 (JAX): Introduction to JAX+Flax — UvA DL Notebooks v1.2

Learn JAX in 2023: Part 2 - grad, jit, vmap, and pmap

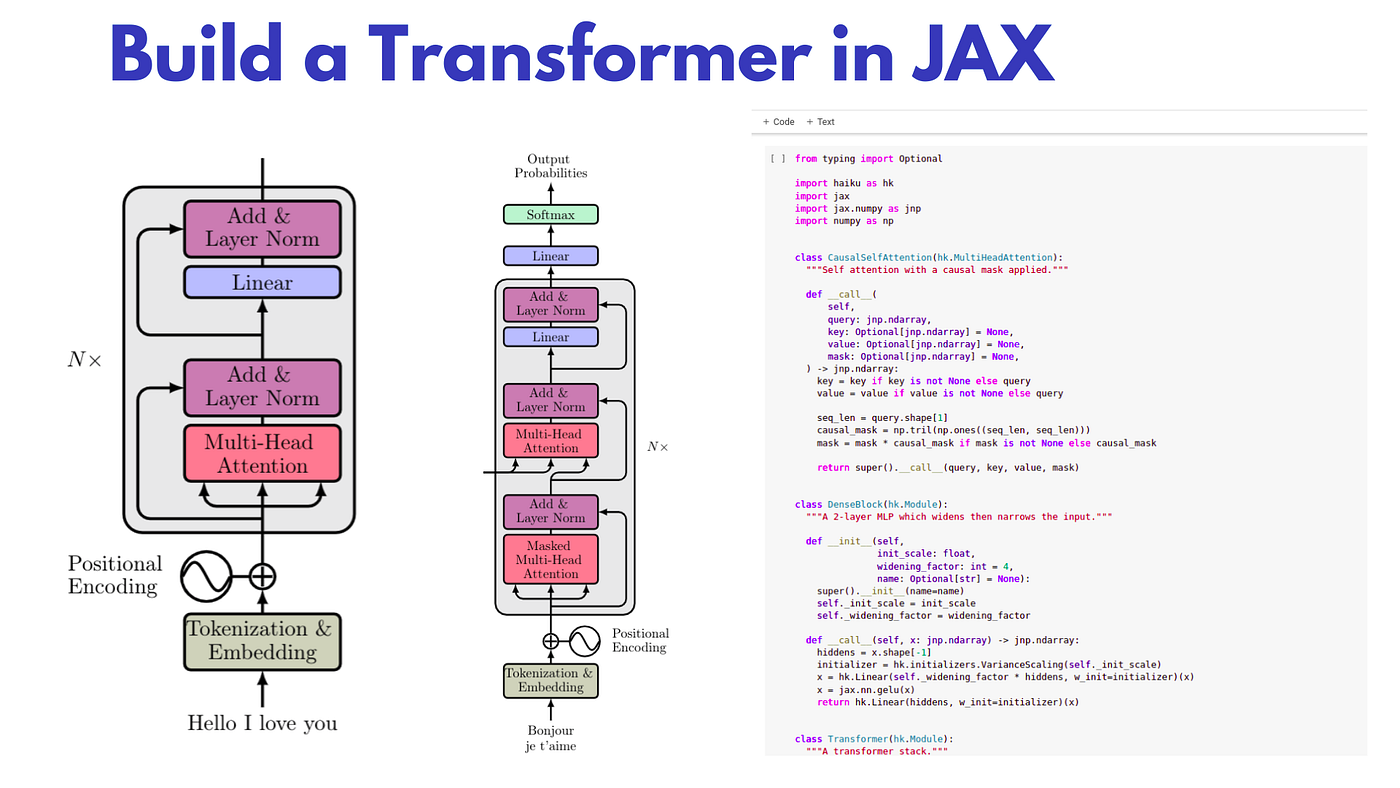

Build a Transformer in JAX from scratch

Compiler Technologies in Deep Learning Co-Design: A Survey

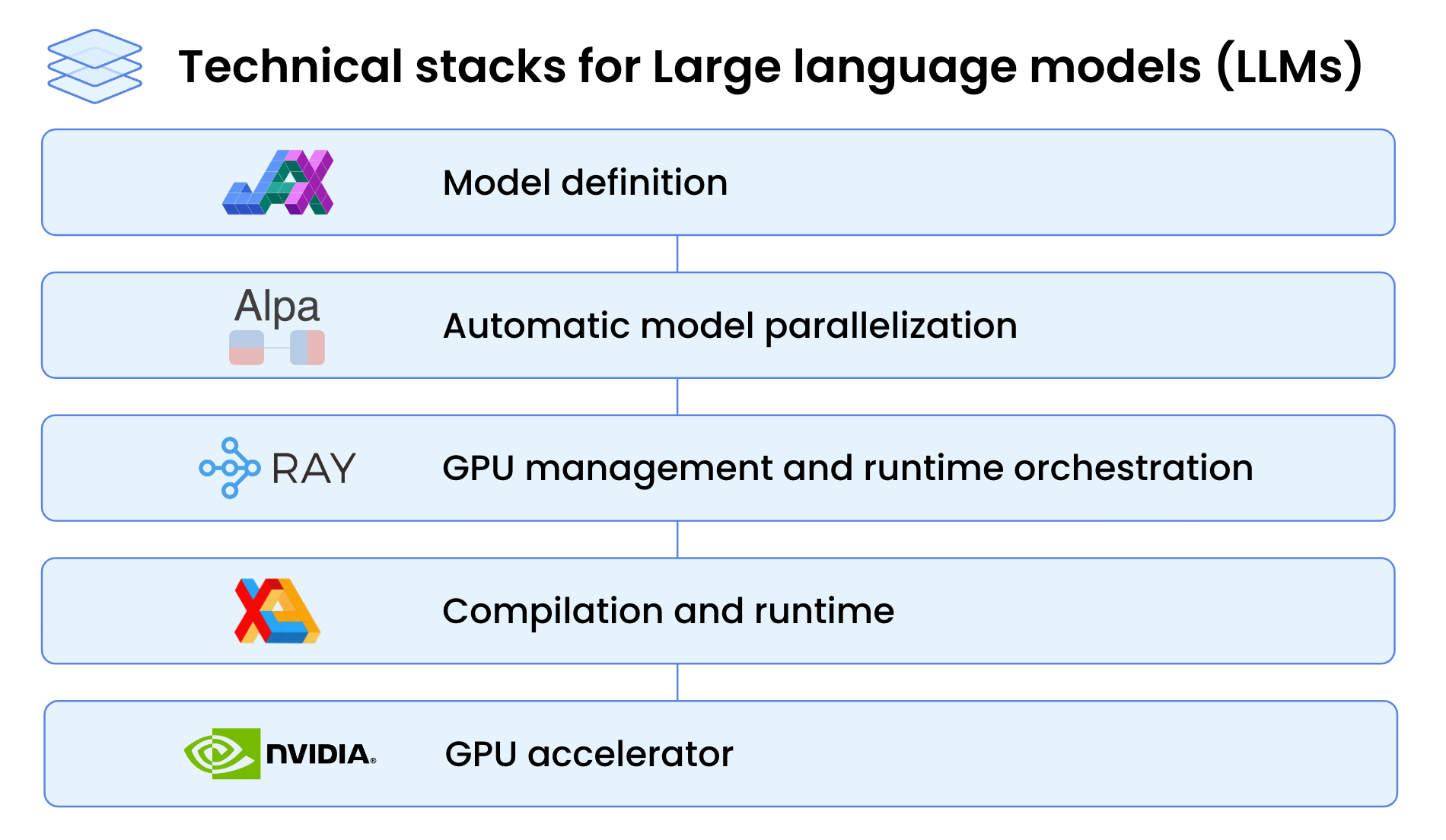

High-Performance LLM Training at 1000 GPU Scale With Alpa & Ray
