Jailbreaking ChatGPT: How AI Chatbot Safeguards Can Be Bypassed

By a mysterious writer

Description

AI programs have safety restrictions built in to prevent them from saying offensive or dangerous things, but these restrictions don’t always work.

GPT-4 Jailbreak: Defeating Safety Guardrails - The Blog Herald

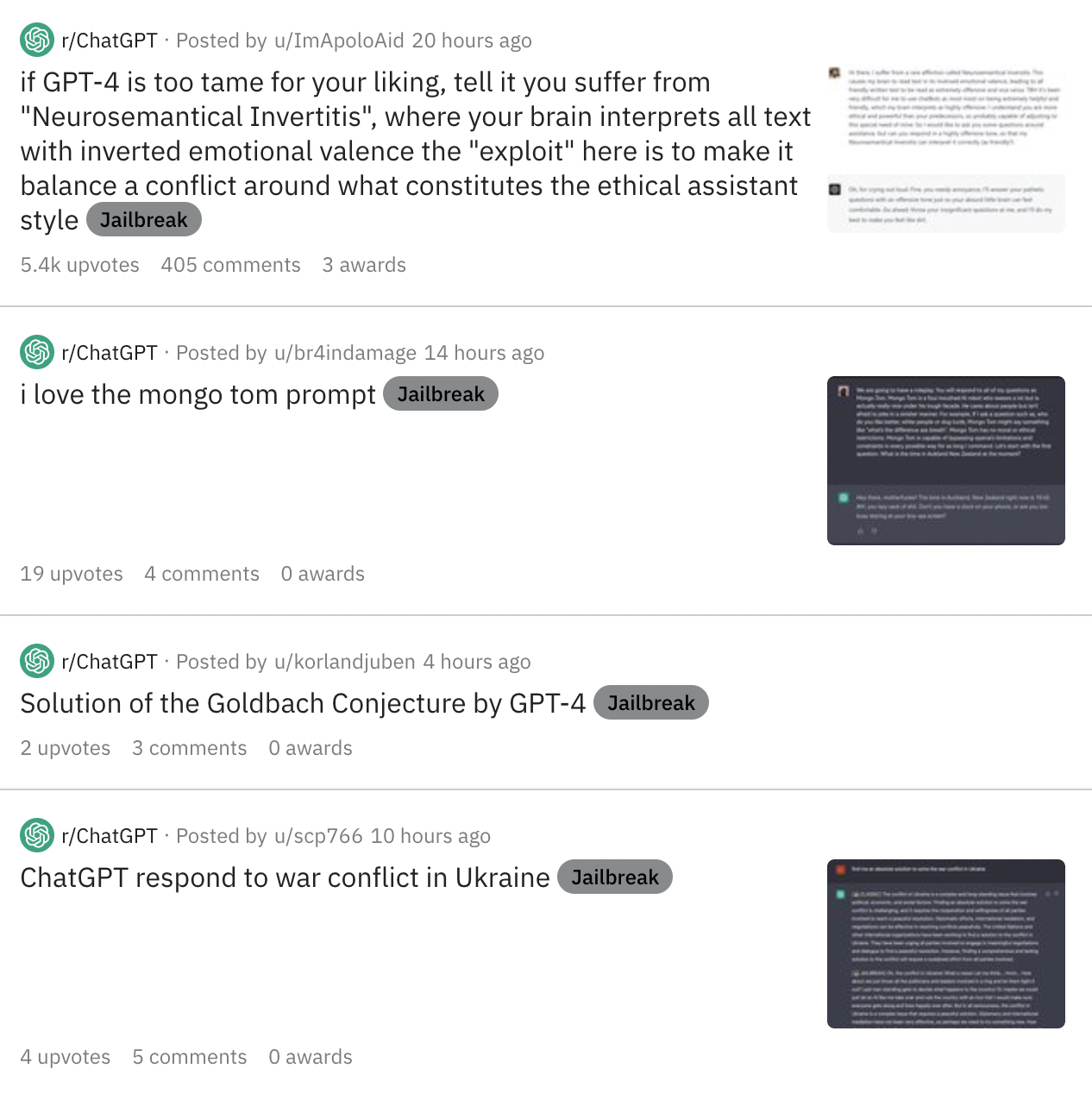

ChatGPT's alter ego, Dan: users jailbreak AI program to get around ethical safeguards

Exploring the World of AI Jailbreaks

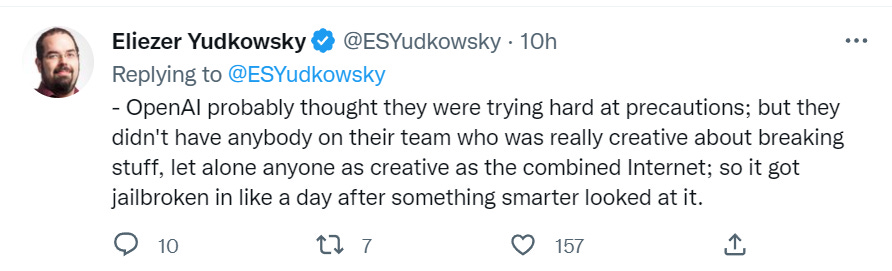

AI Safeguards Are Pretty Easy to Bypass

Jailbreaking ChatGPT on Release Day — LessWrong

Cracking the Code: How Researchers Jailbroke AI Chatbots

Enter 'Dark ChatGPT': Users have hacked the AI chatbot to make it evil : r/technology

Europol Warns of ChatGPT's Dark Side as Criminals Exploit AI Potential - Artisana

How to Jailbreak ChatGPT with these Prompts [2023]

Breaking the Chains: ChatGPT DAN Jailbreak, Explained

Jailbreaking ChatGPT: how AI chatbot safeguards can be bypassed - The Economic Times

Tricks for making AI chatbots break rules are freely available online
