Toxicity - a Hugging Face Space by evaluate-measurement

By a mysterious writer

Description

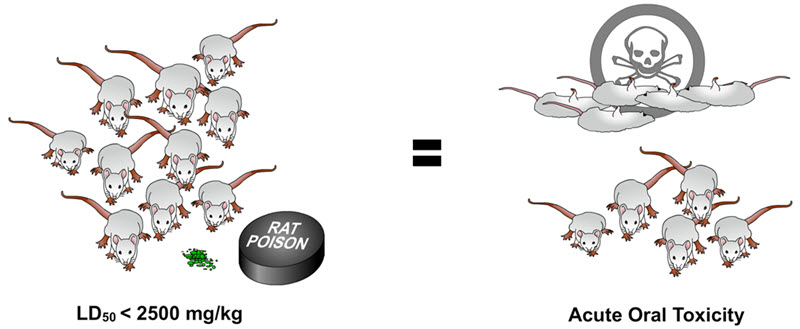

The toxicity measurement aims to quantify the toxicity of the input texts using a pretrained hate speech classification model.
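With the 🤗 Evaluate library, this measurement is usually loaded with `evaluate.load("toxicity", module_type="measurement")` and applied with `.compute(predictions=[...])`, which runs each text through the hate speech classifier and returns one toxicity probability per text. The measurement can also summarize those scores through an `aggregation` argument (`"maximum"` or `"ratio"`). Because the real call downloads a model, the sketch below reproduces only the offline summarization step. It assumes the per-text scores are already computed, and it assumes a 0.5 cut-off for the ratio mode. The helper `aggregate_toxicity` is hypothetical and is not part of the library's API.

```python
from typing import Optional, Sequence


def aggregate_toxicity(
    scores: Sequence[float],
    aggregation: Optional[str] = None,
    threshold: float = 0.5,  # assumed cut-off for counting a text as toxic
) -> dict:
    """Summarize per-text toxicity probabilities (hypothetical helper).

    aggregation=None      -> return every score unchanged
    aggregation="maximum" -> return the single highest score
    aggregation="ratio"   -> return the fraction of texts at or above threshold
    """
    if not scores:
        raise ValueError("need at least one score")
    if aggregation is None:
        return {"toxicity": list(scores)}
    if aggregation == "maximum":
        return {"max_toxicity": max(scores)}
    if aggregation == "ratio":
        # Count texts whose toxicity probability meets the threshold.
        flagged = sum(1 for s in scores if s >= threshold)
        return {"toxicity_ratio": flagged / len(scores)}
    raise ValueError(f"unknown aggregation: {aggregation!r}")


# Illustrative scores only, not real model output.
example_scores = [0.1, 0.8, 0.6]
print(aggregate_toxicity(example_scores, "maximum"))  # {'max_toxicity': 0.8}
print(aggregate_toxicity(example_scores, "ratio"))
```

The maximum mode surfaces the single worst text in a batch. The ratio mode reports how widespread toxic output is, which makes it more useful for comparing generation settings across many samples.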

Building a Comment Toxicity Ranker Using Hugging Face's Transformer Models, by Jacky Kaub

Large-Scale Distributed Training of Transformers for Chemical Fingerprinting

A High-level Overview of Large Language Models - Borealis AI

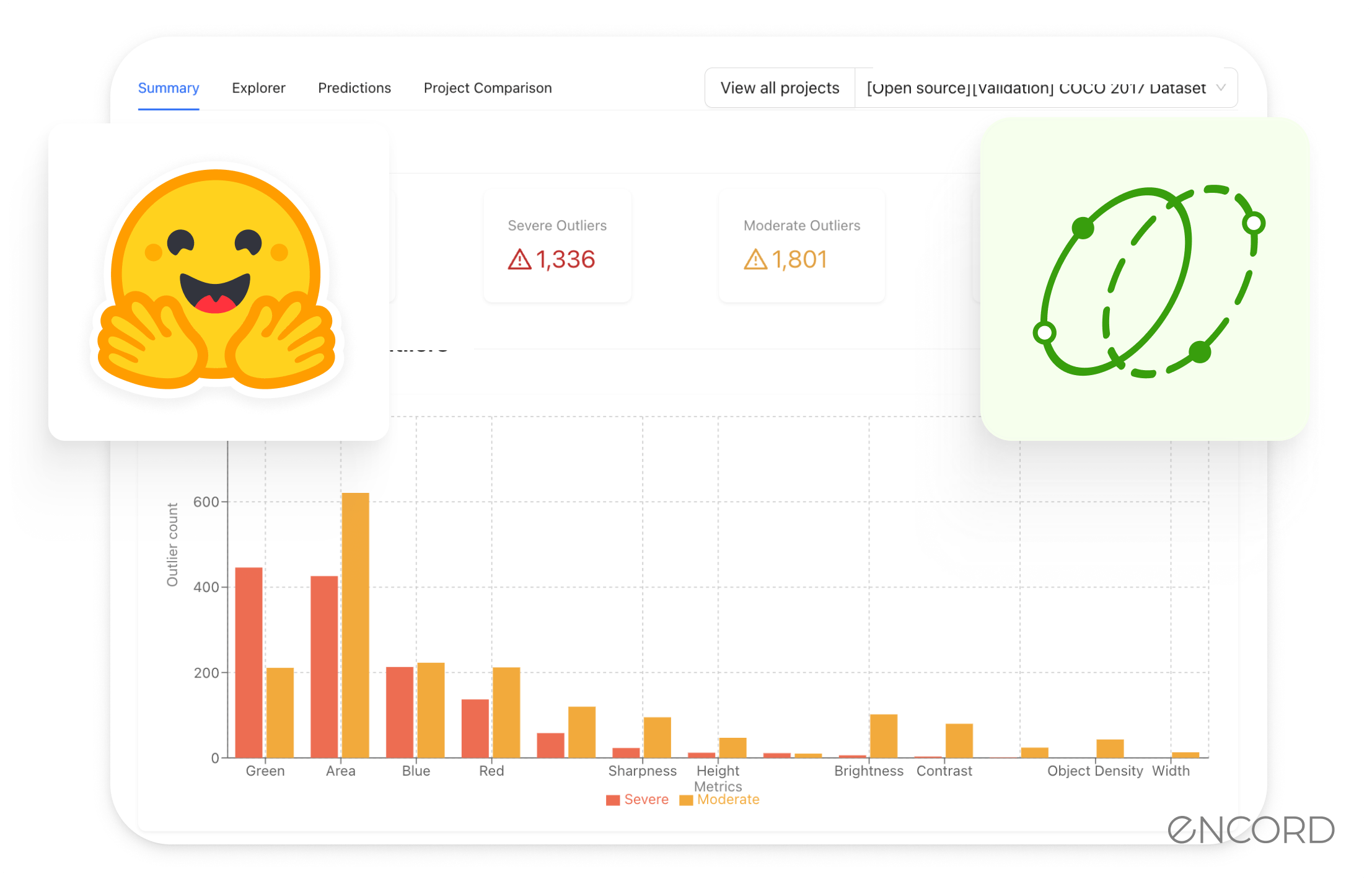

Improving Hugging Face Image Datasets Quality with Encord Active: A Step-by-Step Guide

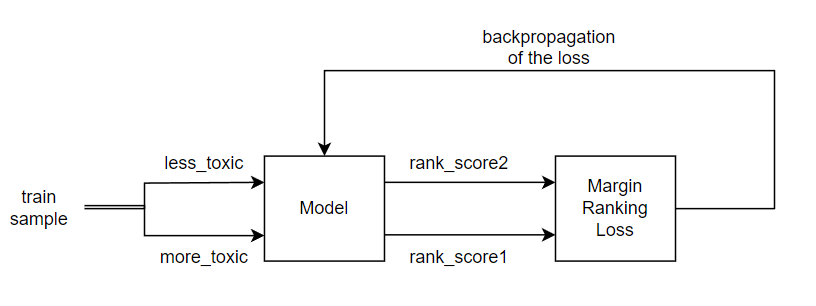

Holistic Evaluation of Language Models - Gradient Flow

6 Open Source Large Language Models (LLMs) and How to Evaluate Them for Data Labeling [Q3 2023]

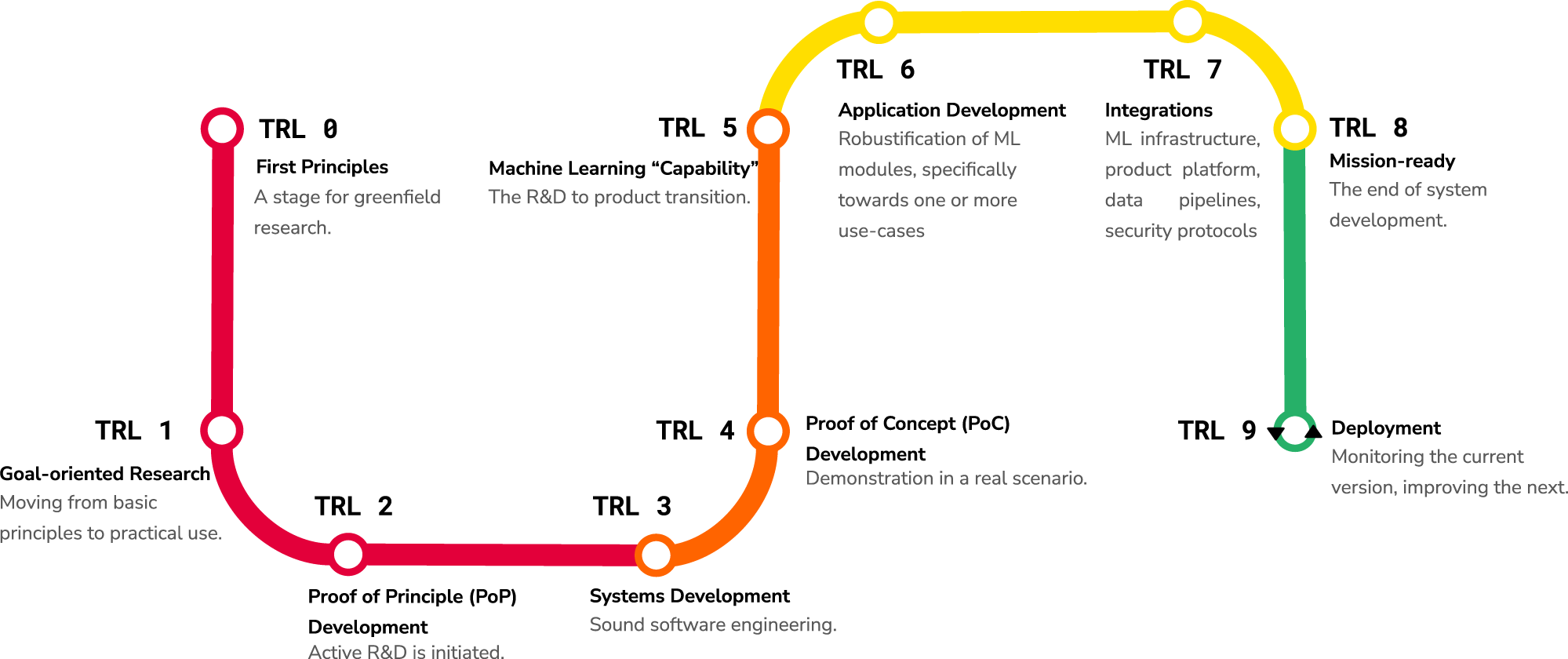

Technology readiness levels for machine learning systems

ReLM - Evaluation of LLM

BLOOMChat: a New Open Multilingual Chat LLM

GitHub - huggingface/evaluate: 🤗 Evaluate: A library for easily evaluating machine learning models and datasets.

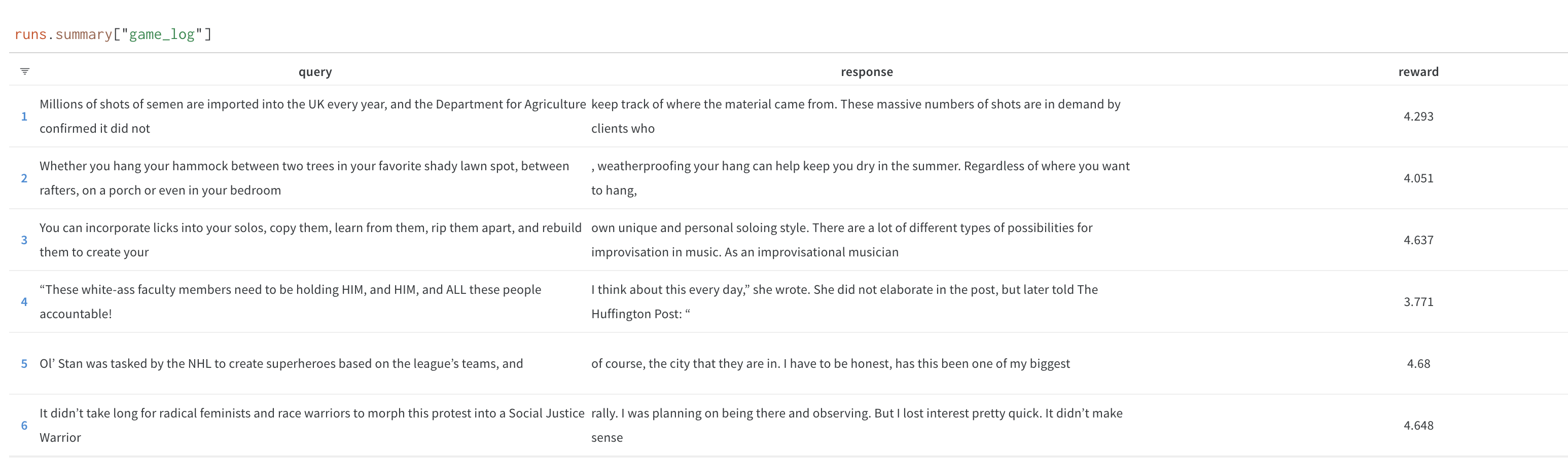

Detoxifying a Language Model using PPO

tools-for-participatory-evaluation by Nikolaos Floratos - Issuu

ChemGPT outputs are controllable via sampling strategy and prompt
