Researchers Use AI to Jailbreak ChatGPT, Other LLMs

By a mysterious writer

Description

"Tree of Attacks With Pruning" is the latest in a growing string of methods for eliciting unintended behavior from a large language model.

Jailbreaking LLM (ChatGPT) Sandboxes Using Linguistic Hacks

What is Jailbreaking in AI models like ChatGPT? - Techopedia

AI researchers have found a way to jailbreak Bard and ChatGPT

Jailbreaking large language models like ChatGPT while we still can

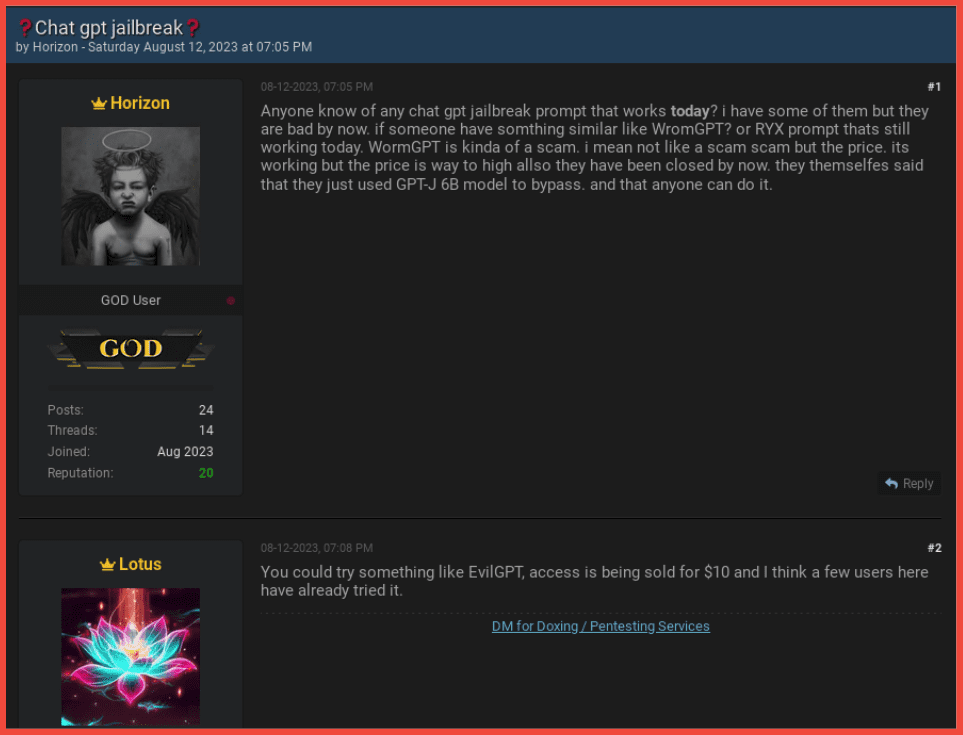

How Cyber Criminals Exploit AI Large Language Models

ChatGPT Jailbreaking Forums Proliferate in Dark Web Communities

[PDF] Multi-step Jailbreaking Privacy Attacks on ChatGPT

Computer scientists claim to have discovered 'unlimited' ways to jailbreak ChatGPT - Fast Company Middle East

Universal LLM Jailbreak: ChatGPT, GPT-4, BARD, BING, Anthropic, and Beyond

From ChatGPT to ThreatGPT: Impact of Generative AI in Cybersecurity and Privacy – arXiv Vanity
