Computing GPU memory bandwidth with Deep Learning Benchmarks

Description

In this article, we look at GPUs in depth to learn about memory bandwidth and how it affects the processing speed of the accelerator for deep learning and other related computational workloads.
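The title refers to computing memory bandwidth. The sketch below shows one common way to reason about it. It is not the article's own method, and it rests on two assumptions. First, theoretical peak bandwidth follows from the memory bus width and the effective per-pin data rate. Second, a roofline-style bound (a swapped-in model, not taken from the source) shows how that bandwidth caps throughput for low arithmetic-intensity work. The helper names and every GPU figure used here are hypothetical and purely illustrative.

```python
def peak_bandwidth_gb_s(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Theoretical peak DRAM bandwidth in GB/s (hypothetical helper).

    bus_width_bits: width of the memory interface in bits.
    data_rate_gbps: effective per-pin data rate in Gbit/s.
    """
    # Every pin moves data_rate_gbps gigabits per second; divide by 8 to get bytes.
    return bus_width_bits * data_rate_gbps / 8


def memory_bound_time_s(bytes_moved: float, bandwidth_gb_s: float) -> float:
    """Lower bound on runtime if a kernel is limited only by memory traffic."""
    return bytes_moved / (bandwidth_gb_s * 1e9)


def attainable_tflops(intensity_flop_per_byte: float,
                      peak_tflops: float,
                      bandwidth_gb_s: float) -> float:
    """Roofline estimate: the lower of the compute roof and the memory roof."""
    # GB/s times FLOP/byte gives GFLOP/s; divide by 1000 to get TFLOP/s.
    memory_roof = bandwidth_gb_s * intensity_flop_per_byte / 1000
    return min(peak_tflops, memory_roof)


if __name__ == "__main__":
    # Illustrative figures for a hypothetical GPU: 384-bit bus, 21 Gbit/s per pin.
    bw = peak_bandwidth_gb_s(384, 21.0)
    print(f"peak bandwidth: {bw:.0f} GB/s")  # peak bandwidth: 1008 GB/s

    # Streaming 14 GB through memory once (e.g. 7e9 fp16 values at 2 bytes each).
    t = memory_bound_time_s(14e9, bw)
    print(f"memory-bound time: {t * 1e3:.1f} ms")

    # Compare a low-intensity kernel with a high-intensity one,
    # assuming a hypothetical 80 TFLOP/s compute peak.
    for ai in (0.25, 200.0):
        print(ai, attainable_tflops(ai, peak_tflops=80.0, bandwidth_gb_s=bw))
```

At low arithmetic intensity, the attainable rate is set entirely by bandwidth, far below the compute peak. Only at high intensity does the compute roof take over. This gap is why memory bandwidth matters so much for deep learning workloads dominated by data movement.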

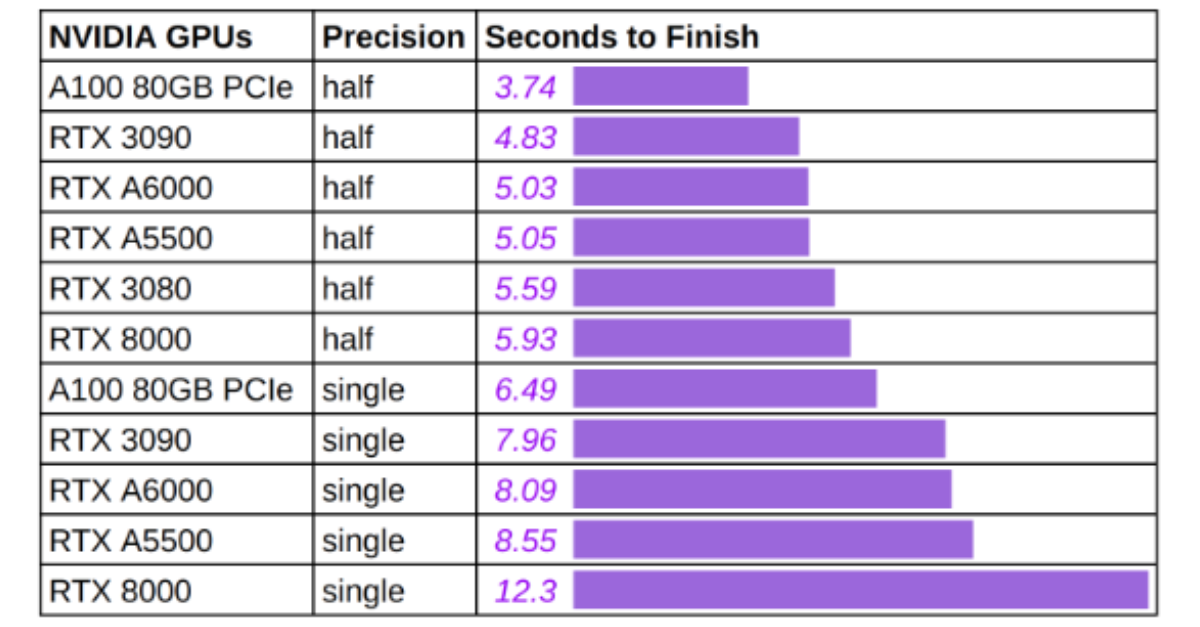

All You Need Is One GPU: Inference Benchmark for Stable Diffusion

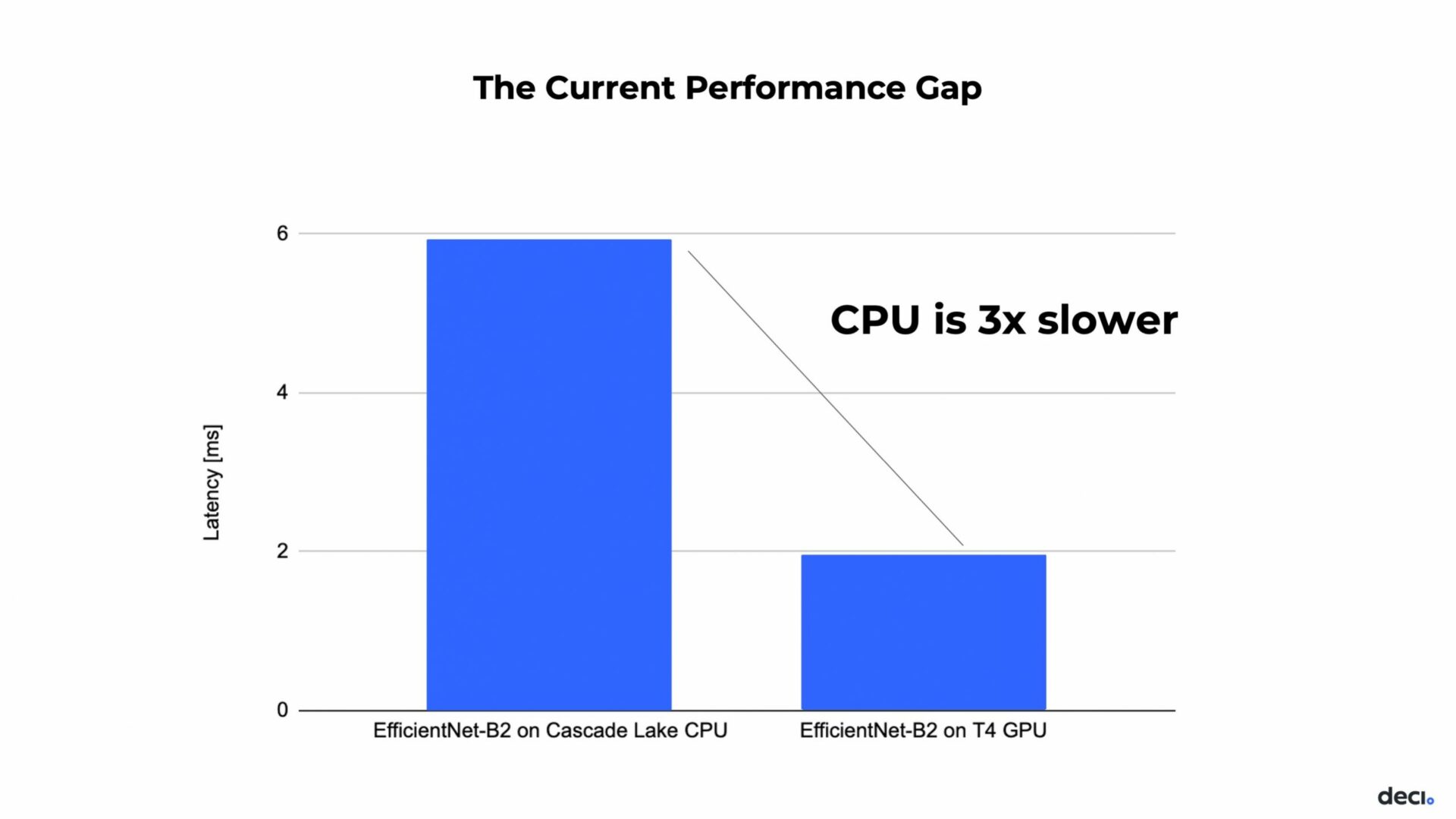

Can You Close the Performance Gap Between GPU and CPU for Deep

GPU Memory Size and Deep Learning Performance (batch size) 12GB vs

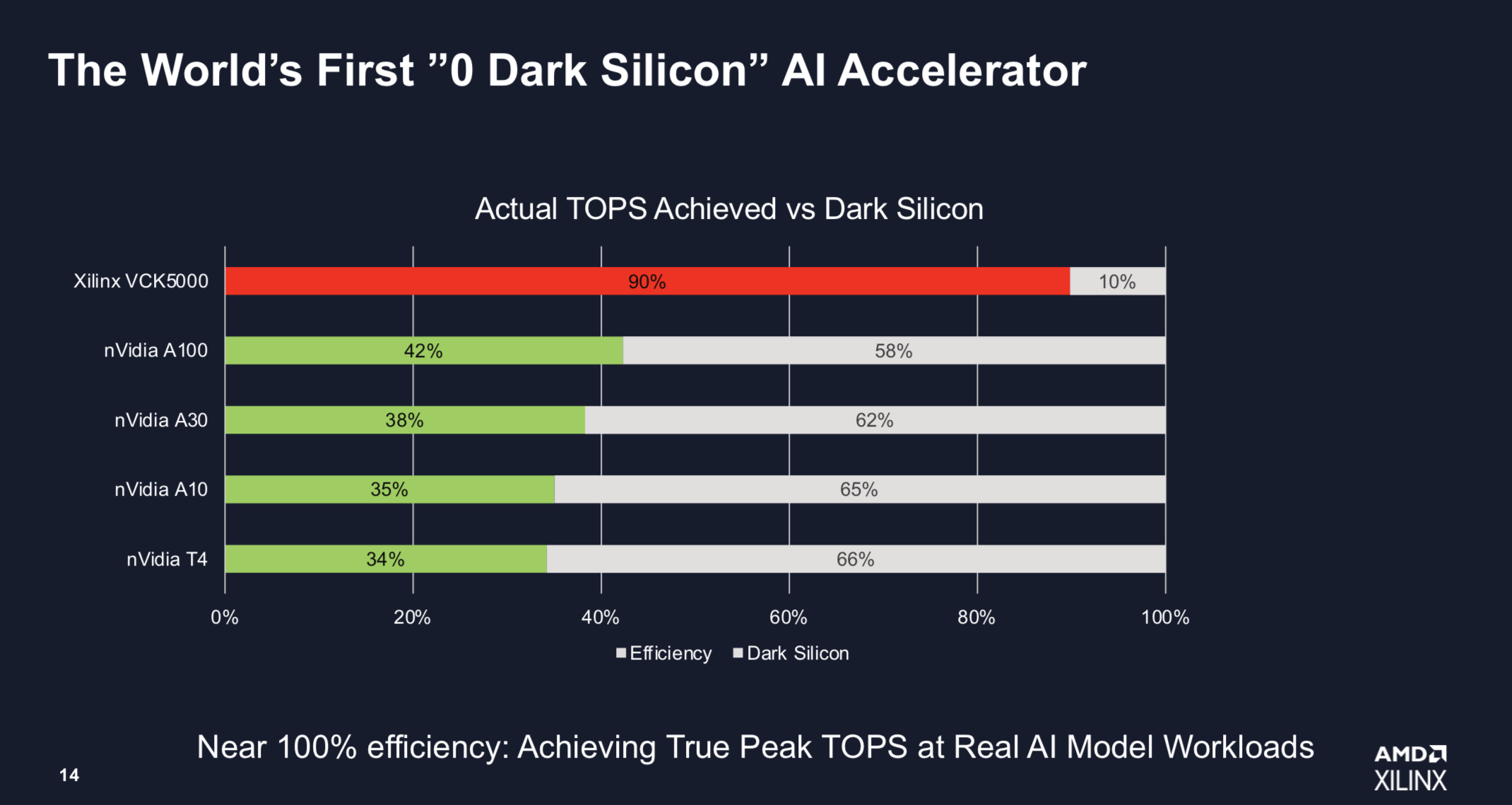

Peak performance and memory bandwidth of multiple NVIDIA GPU

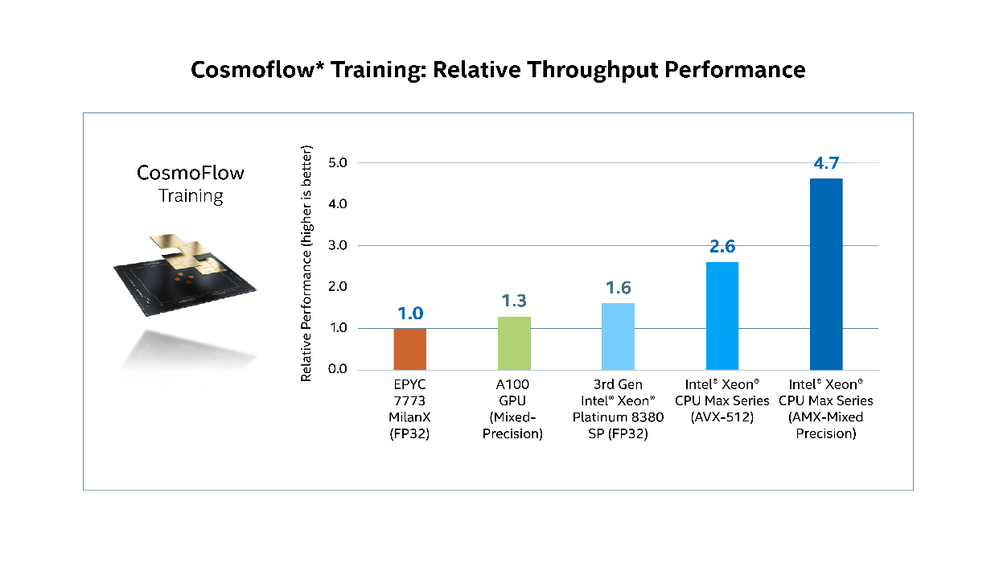

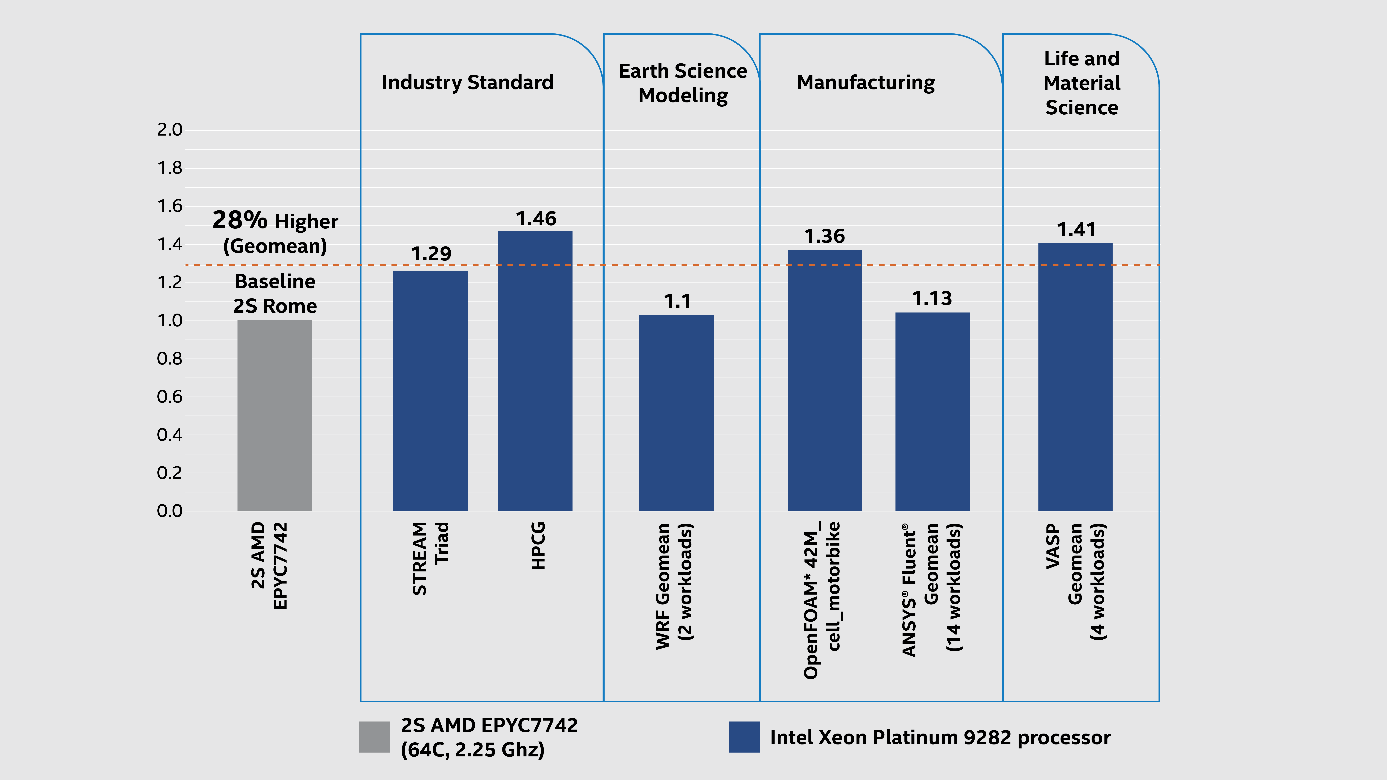

High Bandwidth Memory Can Make CPUs the Desired Platform for AI

How High-Bandwidth Memory Will Break Performance Bottlenecks - The

Why are GPUs well-suited to deep learning? - Quora

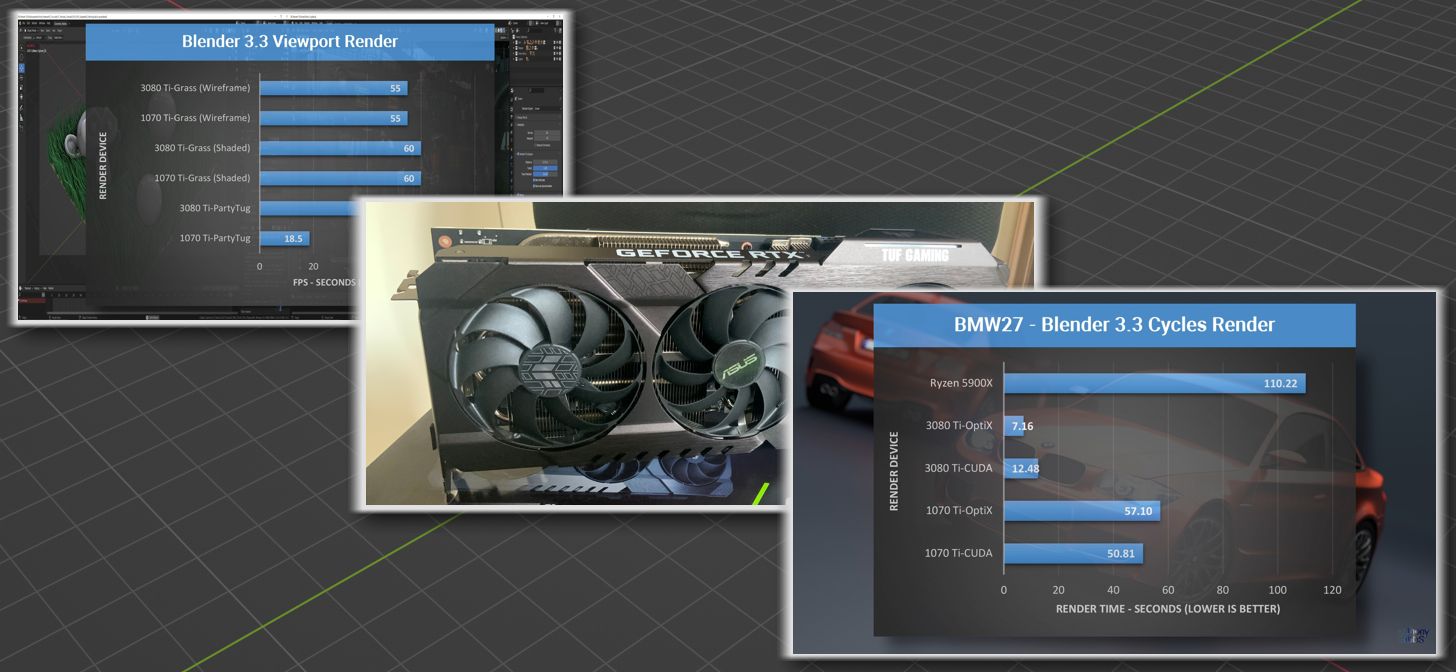

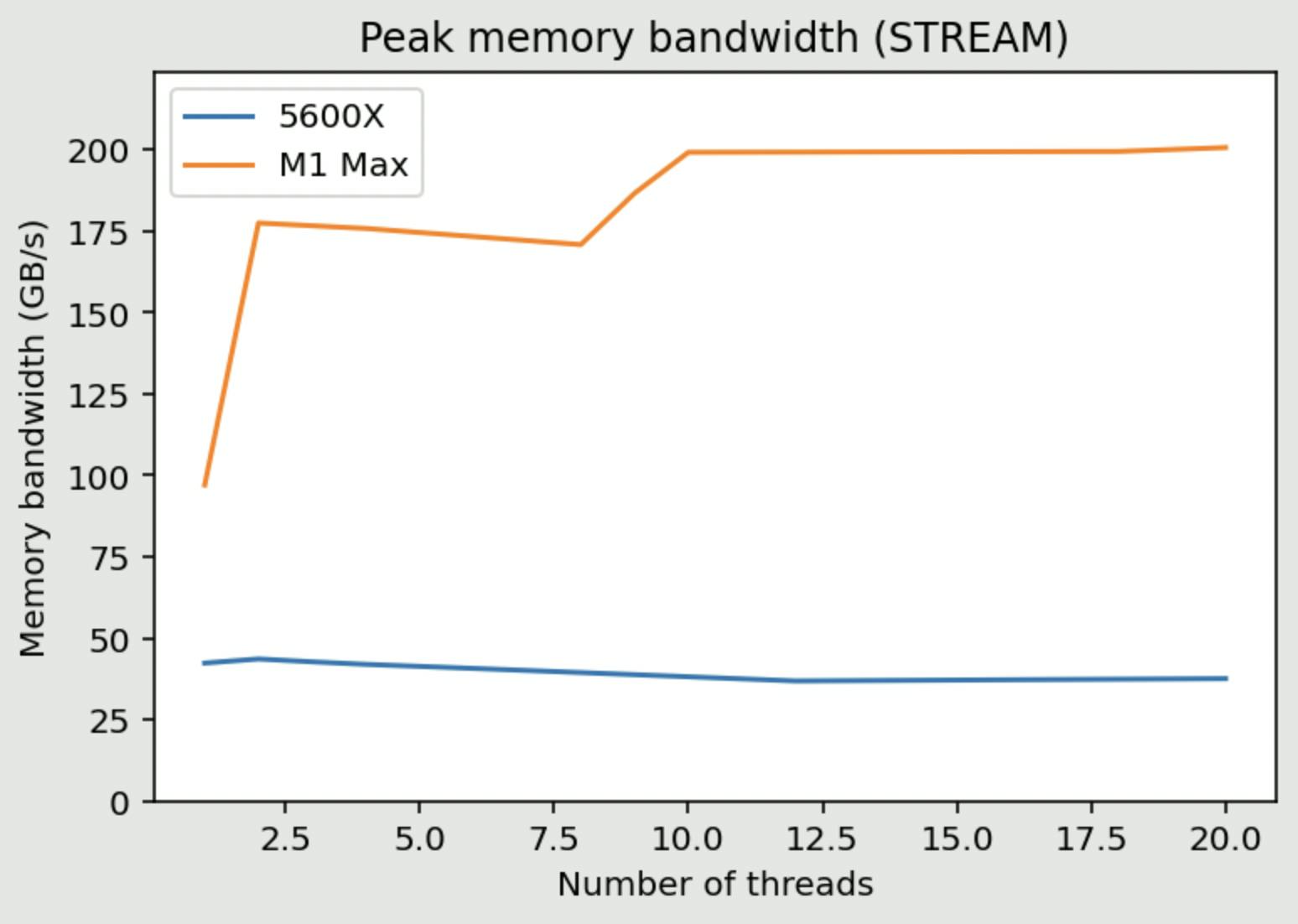

Benchmarking the Apple M1 Max

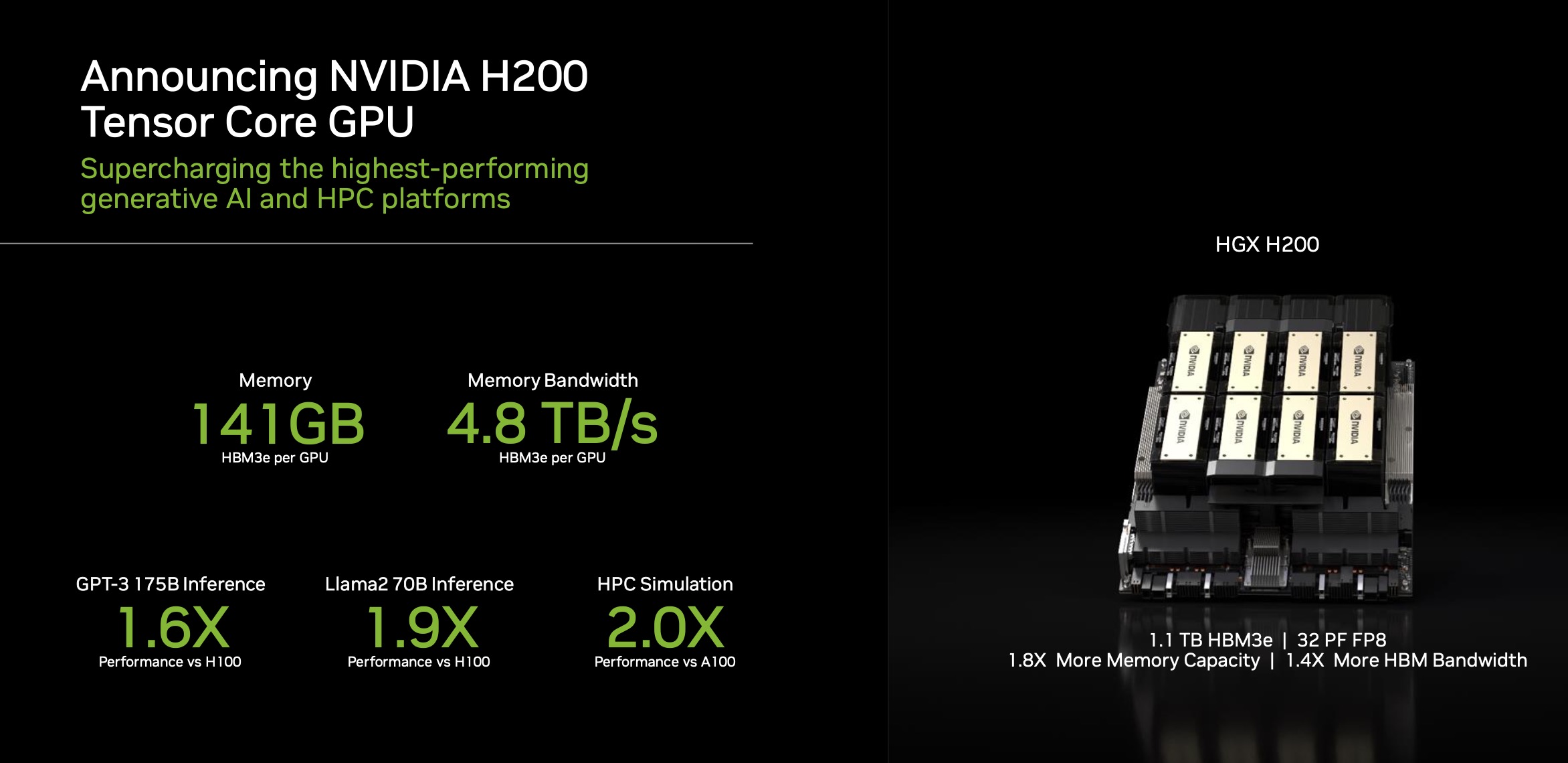

NVIDIA introduces Hopper H200 GPU with 141GB of HBM3e memory

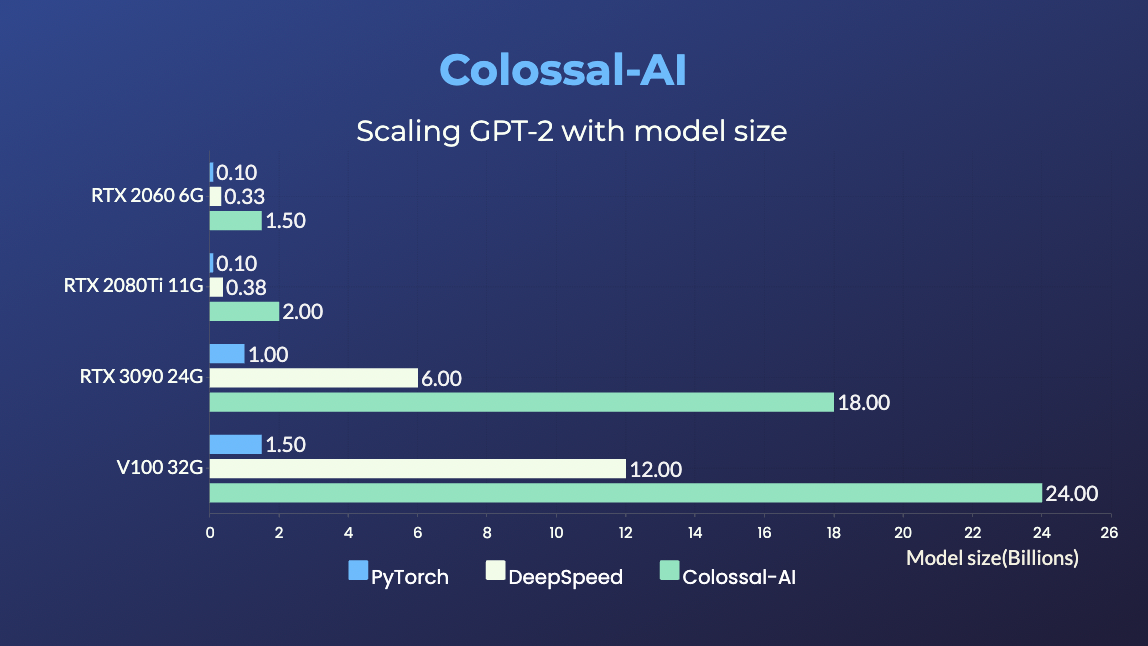

Train 18-billion-parameter GPT models with a single GPU on your

Best GPUs for Machine Learning for Your Next Project

BIDMach: Machine Learning at the Limit with GPUs

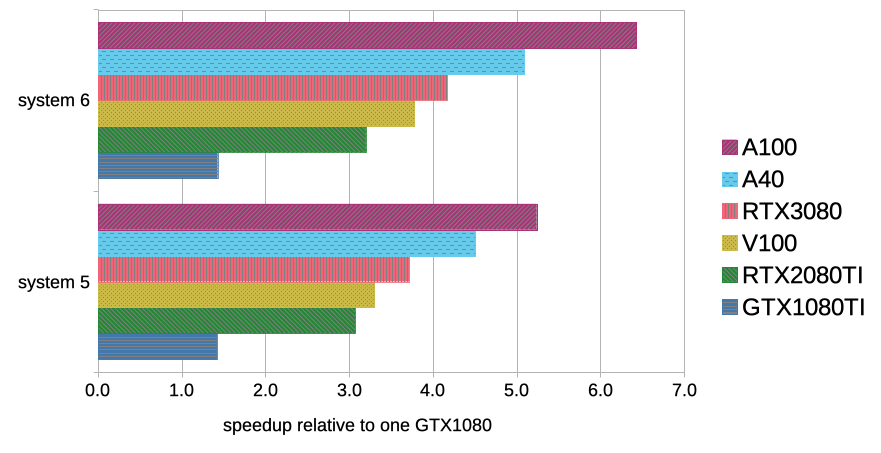

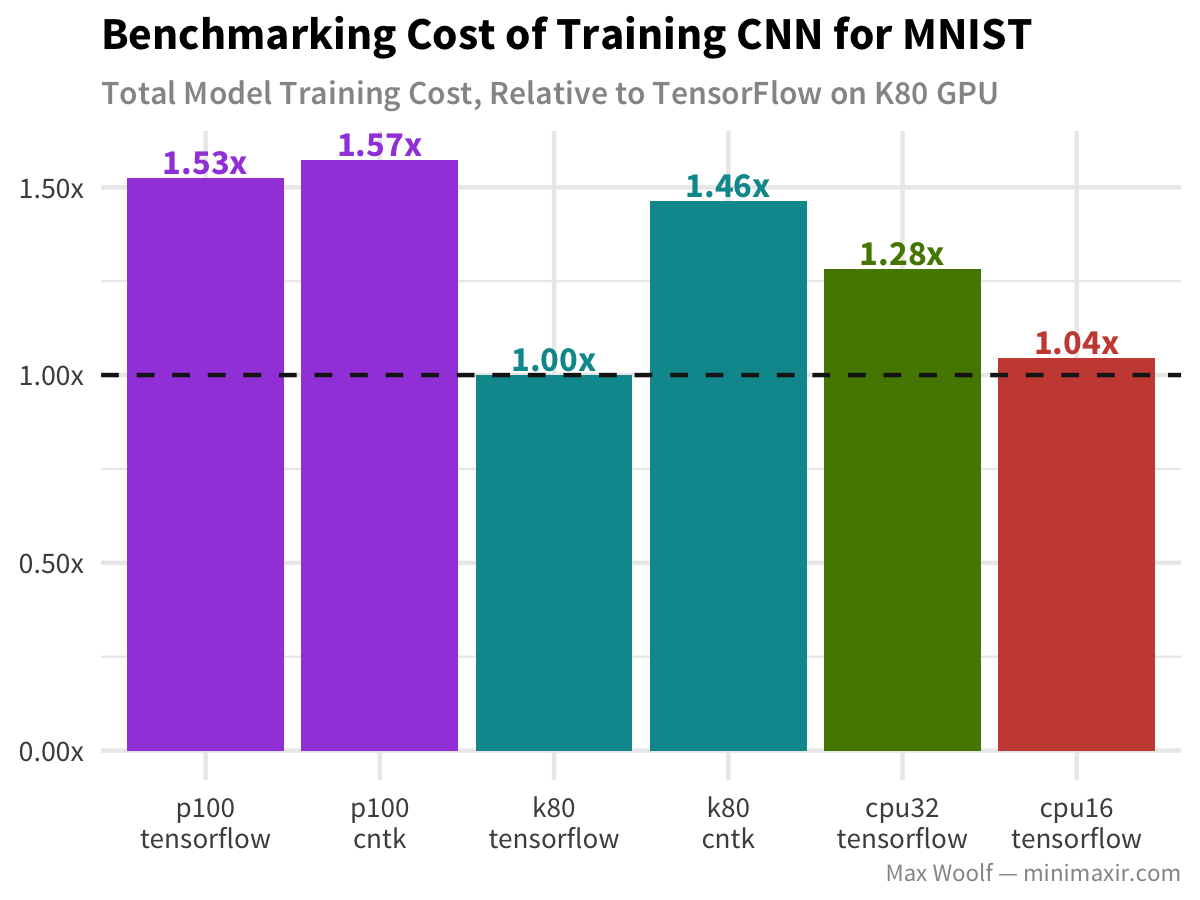

Benchmarking Modern GPUs for Maximum Cloud Cost Efficiency in Deep